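The wave of artificial intelligence is sweeping the globe. Although the concept has been discussed for decades, the underlying technology has advanced faster and faster in recent years. Terms such as machine learning, deep learning, and computer vision have gradually entered everyday life, and all of them fall under the umbrella of artificial intelligence.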

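Computer vision is a branch of artificial intelligence. It is in fact an interdisciplinary field that draws on computer science, mathematics, engineering, physics, biology, and psychology. Many scientists believe that computer vision has paved the way for the development of artificial intelligence.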

Put simply, computer vision gives a computer a pair of eyes for observing the world, then puts the computer's fast computation to work for people.

Today we give a simple introduction to gesture recognition with Python computer vision, recognizing the number gestures (one, two, three, four, and five) and the thumbs-up.

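Preparation

In this article we use Python's OpenCV module and the hand-model module mediapipe.

OpenCV is a widely used computer vision and image processing module.

mediapipe is a machine learning framework for multimedia applications, developed and open-sourced by Google.

Both can be installed with pip:

pip install opencv-python

pip install mediapipe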

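If Anaconda is installed on your computer, it is recommended to install these modules from the Anaconda environment command line, so that they go into a dedicated machine learning environment.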

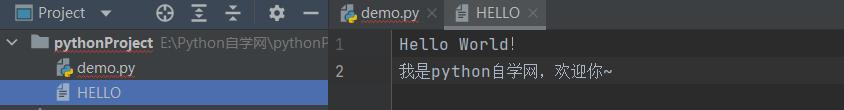

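Once OpenCV and mediapipe are installed, you can write the following in your Python code:

import cv2
import mediapipe as mp

If both imports run without errors, the opencv-python and mediapipe modules are installed correctly, and we can move on to today's main topic.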
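If you would rather check for the modules without importing them, a minimal sketch along these lines could be used. The helper name check_modules is made up for this example; importlib.util.find_spec is part of the Python standard library.

```python
import importlib.util


def check_modules(names):
    """Map each top-level module name to True if Python can locate it.

    Hypothetical helper: it only looks the module up, it does not import it.
    """
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # cv2 is the import name of opencv-python
    for name, found in check_modules(["cv2", "mediapipe"]).items():
        print(f"{name}: {'installed' if found else 'missing'}")
```

A module reported as missing usually means pip installed it into a different Python environment than the one running the script.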

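Detecting the hand model

To recognize gestures, we first need to find the hand in the input image. Here we use mediapipe to locate the hand and wrap the detection logic in a module of its own, which we will import in the gesture recognition code later on.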

HandTrackingModule.py

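# -*- coding:utf-8 -*-
"""
CODE >>> SINCE IN CAIXYPROMISE.
MOTTO >>> STRIVE FOR EXCELLENT.
CONSTANTLY STRIVING FOR SELF-IMPROVEMENT.
@ By: CaixyPromise
@ Date: 2021-10-17
"""
import cv2
import mediapipe as mp


class HandDetector:
    """
    Finds hands using the mediapipe library and exports the landmarks in pixel format.
    Adds extra functionality, such as finding how many fingers are up or the
    distance between two fingers. Also provides bounding box info for the hand found.
    """

    def __init__(self, mode=False, maxHands=2, detectionCon=0.5, minTrackCon=0.5):
        """
        :param mode: in static mode, detection runs on every image
        :param maxHands: maximum number of hands to detect
        :param detectionCon: minimum detection confidence
        :param minTrackCon: minimum tracking confidence
        """
        self.mode = mode
        self.maxHands = maxHands
        self.detectionCon = detectionCon
        self.minTrackCon = minTrackCon
        self.mpHands = mp.solutions.hands
        # Keyword arguments are used because the positional order of the
        # Hands() parameters differs between mediapipe releases.
        self.hands = self.mpHands.Hands(
            static_image_mode=self.mode,
            max_num_hands=self.maxHands,
            min_detection_confidence=self.detectionCon,
            min_tracking_confidence=self.minTrackCon)
        self.mpDraw = mp.solutions.drawing_utils
        self.tipIds = [4, 8, 12, 16, 20]  # landmark indices of the five fingertips
        self.fingers = []
        self.lmList = []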

    def findHands(self, img, draw=True):
        """
        Finds hands in a BGR image.
        :param img: image in which to find the hands
        :param draw: flag to draw the output on the image
        :return: the image, with or without drawings
        """
        # OpenCV delivers frames in BGR order, while mediapipe expects RGB
        imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        self.results = self.hands.process(imgRGB)
        if self.results.multi_hand_landmarks:
            for handLms in self.results.multi_hand_landmarks:
                if draw:
                    self.mpDraw.draw_landmarks(img, handLms,
                                               self.mpHands.HAND_CONNECTIONS)
        return img

    def findPosition(self, img, handNo=0, draw=True):
        """
        Finds the landmarks of a single hand and puts them in a list in pixel format.
        Also returns the bounding box around the hand.
        :param img: main image to search
        :param handNo: hand id, if more than one hand is detected
        :param draw: flag to draw the output on the image (draws the bounding rectangle by default)
        :return: list of hand joint positions in pixel format; hand bounding box info
        """
        xList = []
        yList = []
        bbox = []
        bboxInfo = []
        self.lmList = []
        if self.results.multi_hand_landmarks:
            myHand = self.results.multi_hand_landmarks[handNo]
            h, w, c = img.shape
            for id, lm in enumerate(myHand.landmark):
                # Landmarks are normalized to [0, 1]; scale them to pixel coordinates
                px, py = int(lm.x * w), int(lm.y * h)
                xList.append(px)
                yList.append(py)
                self.lmList.append([px, py])
                if draw:
                    cv2.circle(img, (px, py), 5, (255, 0, 255), cv2.FILLED)
            xmin, xmax = min(xList), max(xList)
            ymin, ymax = min(yList), max(yList)
            boxW, boxH = xmax - xmin, ymax - ymin
            bbox = xmin, ymin, boxW, boxH
            cx, cy = bbox[0] + (bbox[2] // 2), bbox[1] + (bbox[3] // 2)
            bboxInfo = {"id": id, "bbox": bbox, "center": (cx, cy)}
            if draw:
                # Draw the bounding box with a 20-pixel margin around the hand
                cv2.rectangle(img, (bbox[0] - 20, bbox[1] - 20),
                              (bbox[0] + bbox[2] + 20, bbox[1] + bbox[3] + 20),
                              (0, 255, 0), 2)
        return self.lmList, bboxInfo

    def fingersUp(self):
        """
        Finds which fingers are raised. Left and right hands are handled separately.
        :return: list of length 5, counted from the thumb, in which a raised
                 finger is marked 1 and a lowered finger 0 (empty if no hand is detected)
        """
        fingers = []
        if self.results.multi_hand_landmarks:
            myHandType = self.handType()
            # Thumb: compare the x coordinate of the tip with the joint next to it
            if myHandType == "Right":
                if self.lmList[self.tipIds[0]][0] > self.lmList[self.tipIds[0] - 1][0]:
                    fingers.append(1)
                else:
                    fingers.append(0)
            else:
                if self.lmList[self.tipIds[0]][0] < self.lmList[self.tipIds[0] - 1][0]:
                    fingers.append(1)
                else:
                    fingers.append(0)
            # 4 Fingers: image y grows downward, so a raised tip has a smaller y
            # than the joint two landmarks below it
            for id in range(1, 5):
                if self.lmList[self.tipIds[id]][1] < self.lmList[self.tipIds[id] - 2][1]:
                    fingers.append(1)
                else:
                    fingers.append(0)
        return fingers

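    def handType(self):
        """
        Checks whether the detected hand is left or right.
        :return: "Right" or "Left"
        """
        if self.results.multi_hand_landmarks:
            # Compare the x coordinates of the little-finger base (17) and the index-finger base (5)
            if self.lmList[17][0] < self.lmList[5][0]:
                return "Right"
            else:
                return "Left"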
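To make the rule inside fingersUp() easier to follow, here is a minimal sketch of the same comparisons written as a standalone function over a plain list of [x, y] points. The function name fingers_up and the landmark values are made up for illustration; the sample hand is synthetic, not real mediapipe output.

```python
TIP_IDS = [4, 8, 12, 16, 20]  # fingertip landmark indices, as in HandDetector.tipIds


def fingers_up(lm_list, hand_type):
    """Return five 0/1 flags (thumb first) for a list of 21 [x, y] pixel points.

    Hypothetical helper that mirrors the comparisons in HandDetector.fingersUp().
    """
    fingers = []
    # Thumb: compare the tip's x with the joint next to it; the direction depends on the hand.
    tip_x = lm_list[TIP_IDS[0]][0]
    joint_x = lm_list[TIP_IDS[0] - 1][0]
    if hand_type == "Right":
        fingers.append(1 if tip_x > joint_x else 0)
    else:
        fingers.append(1 if tip_x < joint_x else 0)
    # Other four fingers: image y grows downward, so a raised tip has a
    # smaller y than the joint two landmarks below it.
    for i in range(1, 5):
        tip_y = lm_list[TIP_IDS[i]][1]
        joint_y = lm_list[TIP_IDS[i] - 2][1]
        fingers.append(1 if tip_y < joint_y else 0)
    return fingers


# Synthetic hand: every point starts at (100, 300), then a few tips are moved.
points = [[100, 300] for _ in range(21)]
points[8] = [100, 100]   # index fingertip raised
points[12] = [120, 100]  # middle fingertip raised
points[4] = [90, 300]    # thumb tip to the left of its neighbouring joint

print(fingers_up(points, "Right"))  # [0, 1, 1, 0, 0]
print(fingers_up(points, "Left"))   # [1, 1, 1, 0, 0]
```

The same points give a different thumb flag for each hand type, which is why fingersUp() calls handType() first.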

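Handling the video input

With hand detection in place, we now need to feed images to the computer so that it can detect hands and recognize gestures. Here we use OpenCV for the input stream: we open the computer's camera to capture frames and use the HandTrackingModule module we just wrote to detect the hand.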

Main.py

# -*- coding:utf-8 -*-
"""
CODE >>> SINCE IN CAIXYPROMISE.
MOTTO >>> STRIVE FOR EXCELLENT.
CONSTANTLY STRIVING FOR SELF-IMPROVEMENT.
@ By: CaixyPromise
@ Date: 2021-10-17
"""
import cv2
from HandTrackingModule import HandDetector


class Main:
    def __init__(self):
        # Open the default camera as a video stream (CAP_DSHOW is the DirectShow backend on Windows)
        self.camera = cv2.VideoCapture(0, cv2.CAP_DSHOW)
        self.camera.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)  # set the resolution
        self.camera.set(cv2.CAP_PROP_FRAME_HEIGHT, 720)

    def Gesture_recognition(self):
        self.detector = HandDetector()  # create the detector once, outside the loop
        while True:
            success, img = self.camera.read()
            if not success:
                break  # no frame could be read from the camera
            img = self.detector.findHands(img)  # find your hand
            lmList, bbox = self.detector.findPosition(img)  # get the position of your hand

            cv2.imshow("camera", img)
            if cv2.getWindowProperty('camera', cv2.WND_PROP_VISIBLE) < 1:
                break
            # Exit the program with the window's close button
            cv2.waitKey(1)
            # if cv2.waitKey(1) & 0xFF == ord("q"):
            #     break  # press q to quit
        self.camera.release()
        cv2.destroyAllWindows()

Now, when we run the program, it opens your computer's default camera. When you show your hand, the output image draws a box around it and marks its main joints.

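Each of these joints has an index. The hand is divided into 21 joints, with the fingertips at indices 4, 8, 12, 16, and 20.

The joints are divided as follows: 0 is the wrist, 1 to 4 are the thumb, 5 to 8 the index finger, 9 to 12 the middle finger, 13 to 16 the ring finger, and 17 to 20 the little finger.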
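For reference, the 21 indices can be written out as an enumeration. The member names below follow the HandLandmark enum in mediapipe's hands solution; the class name HandJoint is only an illustrative stand-in so that the sketch runs without mediapipe installed.

```python
from enum import IntEnum


class HandJoint(IntEnum):
    """Stand-in for mediapipe's HandLandmark enum (same names and indices)."""
    WRIST = 0
    THUMB_CMC = 1
    THUMB_MCP = 2
    THUMB_IP = 3
    THUMB_TIP = 4
    INDEX_FINGER_MCP = 5
    INDEX_FINGER_PIP = 6
    INDEX_FINGER_DIP = 7
    INDEX_FINGER_TIP = 8
    MIDDLE_FINGER_MCP = 9
    MIDDLE_FINGER_PIP = 10
    MIDDLE_FINGER_DIP = 11
    MIDDLE_FINGER_TIP = 12
    RING_FINGER_MCP = 13
    RING_FINGER_PIP = 14
    RING_FINGER_DIP = 15
    RING_FINGER_TIP = 16
    PINKY_MCP = 17
    PINKY_PIP = 18
    PINKY_DIP = 19
    PINKY_TIP = 20


# The fingertips are the members whose names end in _TIP.
FINGERTIPS = [joint for joint in HandJoint if joint.name.endswith("_TIP")]
print([int(joint) for joint in FINGERTIPS])  # [4, 8, 12, 16, 20]
```

The printed indices match the tipIds list used by HandDetector.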

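Recognizing gestures

So far we have covered hand detection and the video input, so now we can write the gesture recognition itself. For this we use the fingersUp() method from the detection module.

Go back to the Main.py file we just wrote (the video input code). Once the hand has been found and drawn, findPosition() returns its position: lmList holds the joint positions (type: list) and bbox holds the bounding box information (type: dict). When nothing is detected, both are empty. So we only need to check the fingers when the list contains data (is non-empty), which can be written as: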


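    def Gesture_recognition(self):
        self.detector = HandDetector()  # create the detector once, outside the loop
        while True:
            success, img = self.camera.read()
            if not success:
                break  # no frame could be read from the camera
            img = self.detector.findHands(img)
            lmList, bbox = self.detector.findPosition(img)

            if lmList:
                # One 0/1 flag per finger, thumb first
                x1, x2, x3, x4, x5 = self.detector.fingersUp()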

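As described for fingersUp() above, the method returns a list of length 5, counted from the thumb, in which a raised finger is marked 1 and a lowered finger is marked 0.

Our goal this time is to recognize the number gestures common in everyday life, plus the thumbs-up. Based on how these gestures are made, the recognition can be written as: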


    def Gesture_recognition(self):
        self.detector = HandDetector()  # create the detector once, outside the loop
        while True:
            success, img = self.camera.read()
            if not success:
                break  # no frame could be read from the camera
            img = self.detector.findHands(img)
            lmList, bbox = self.detector.findPosition(img)

            if lmList:
                x1, x2, x3, x4, x5 = self.detector.fingersUp()

                if (x2 == 1 and x3 == 1) and (x4 == 0 and x5 == 0 and x1 == 0):
                    pass  # TWO
                elif (x2 == 1 and x3 == 1 and x4 == 1) and (x1 == 0 and x5 == 0):
                    pass  # THREE
                elif (x2 == 1 and x3 == 1 and x4 == 1 and x5 == 1) and (x1 == 0):
                    pass  # FOUR
                elif x1 == 1 and x2 == 1 and x3 == 1 and x4 == 1 and x5 == 1:
                    pass  # FIVE
                # The remaining conditions are joined with "and"; a comma-separated
                # group would form a tuple, which is always truthy
                elif x2 == 1 and (x1 == 0 and x3 == 0 and x4 == 0 and x5 == 0):
                    pass  # ONE
                elif x1 == 1 and (x2 == 0 and x3 == 0 and x4 == 0 and x5 == 0):
                    pass  # NICE_GOOD
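The chain of comparisons above can also be expressed as a lookup table keyed by the five finger flags. This is an alternative sketch rather than the approach used in this article; the names GESTURES and classify are made up for the example.

```python
# Finger flags are ordered (thumb, index, middle, ring, little finger),
# the same order that fingersUp() returns.
GESTURES = {
    (0, 1, 0, 0, 0): "ONE",
    (0, 1, 1, 0, 0): "TWO",
    (0, 1, 1, 1, 0): "THREE",
    (0, 1, 1, 1, 1): "FOUR",
    (1, 1, 1, 1, 1): "FIVE",
    (1, 0, 0, 0, 0): "NICE_GOOD",
}


def classify(fingers):
    """Return the gesture label for five 0/1 flags, or None if the pattern is unknown."""
    return GESTURES.get(tuple(fingers))


print(classify([0, 1, 1, 0, 0]))  # TWO
print(classify([1, 0, 1, 0, 1]))  # None
```

With a table, adding a new gesture means adding one entry, and any unlisted combination falls through to None instead of matching a later branch by accident.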

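With the basic recognition done, we need to display the result. Here we take the position of the hand's bounding box returned in bbox and use OpenCV's putText method to draw the recognition result.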


    def Gesture_recognition(self):
        self.detector = HandDetector()  # create the detector once, outside the loop
        while True:
            success, img = self.camera.read()
            if not success:
                break  # no frame could be read from the camera
            img = self.detector.findHands(img)
            lmList, bbox = self.detector.findPosition(img)

            if lmList:
                # Top-left corner of the hand's bounding box, used as the text position
                x_1, y_1 = bbox["bbox"][0], bbox["bbox"][1]
                x1, x2, x3, x4, x5 = self.detector.fingersUp()

                if (x2 == 1 and x3 == 1) and (x4 == 0 and x5 == 0 and x1 == 0):
                    cv2.putText(img, "2_TWO", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif (x2 == 1 and x3 == 1 and x4 == 1) and (x1 == 0 and x5 == 0):
                    cv2.putText(img, "3_THREE", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif (x2 == 1 and x3 == 1 and x4 == 1 and x5 == 1) and (x1 == 0):
                    cv2.putText(img, "4_FOUR", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif x1 == 1 and x2 == 1 and x3 == 1 and x4 == 1 and x5 == 1:
                    cv2.putText(img, "5_FIVE", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif x2 == 1 and (x1 == 0 and x3 == 0 and x4 == 0 and x5 == 0):
                    cv2.putText(img, "1_ONE", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif x1 == 1 and (x2 == 0 and x3 == 0 and x4 == 0 and x5 == 0):
                    cv2.putText(img, "GOOD!", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)

            cv2.imshow("camera", img)
            if cv2.getWindowProperty('camera', cv2.WND_PROP_VISIBLE) < 1:
                break
            cv2.waitKey(1)
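cv2.putText treats the given point as the bottom-left corner of the text, so a label placed exactly at the top-left corner of the bounding box may be clipped when the hand is close to the top edge of the frame. The following is a minimal sketch of one way to keep the text origin inside the image. The helper label_anchor and its margin default are hypothetical; they are not part of OpenCV or of the code above.

```python
def label_anchor(bbox, frame_shape, margin=30):
    """Return an (x, y) text origin near the box's top-left corner, kept inside the frame.

    bbox is (x, y, w, h) in pixels, as stored under bboxInfo["bbox"];
    frame_shape is img.shape, i.e. (height, width, channels).
    """
    x, y, _w, _h = bbox
    height, width = frame_shape[:2]
    # Clamp the origin to the image, leaving `margin` pixels of headroom
    # because the text is drawn upward from its bottom-left origin.
    x = min(max(x, 0), width - 1)
    y = min(max(y, margin), height - 1)
    return x, y


print(label_anchor((200, 5, 150, 180), (720, 1280, 3)))    # (200, 30)
print(label_anchor((-15, 400, 150, 180), (720, 1280, 3)))  # (0, 400)
```

In the loop, the returned point could be passed to cv2.putText in place of (x_1, y_1). The margin of 30 pixels is only a guess at the text height for font scale 3.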

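We have now finished both recognizing the gesture and displaying the result. Run the complete code to check that it works.

The complete code is as follows: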

# -*- coding:utf-8 -*-
"""
CODE >>> SINCE IN CAIXYPROMISE.
STRIVE FOR EXCELLENT.
CONSTANTLY STRIVING FOR SELF-IMPROVEMENT.
@ by: caixy
@ date: 2021-10-1
"""
import cv2
from HandTrackingModule import HandDetector


class Main:
    def __init__(self):
        # Open the default camera (CAP_DSHOW is the DirectShow backend on Windows)
        self.camera = cv2.VideoCapture(0, cv2.CAP_DSHOW)
        self.camera.set(cv2.CAP_PROP_FRAME_WIDTH, 1280)
        self.camera.set(cv2.CAP_PROP_FRAME_HEIGHT, 720)

    def Gesture_recognition(self):
        self.detector = HandDetector()  # create the detector once, outside the loop
        while True:
            success, img = self.camera.read()
            if not success:
                break  # no frame could be read from the camera
            img = self.detector.findHands(img)
            lmList, bbox = self.detector.findPosition(img)

            if lmList:
                # Top-left corner of the hand's bounding box, used as the text position
                x_1, y_1 = bbox["bbox"][0], bbox["bbox"][1]
                x1, x2, x3, x4, x5 = self.detector.fingersUp()

                if (x2 == 1 and x3 == 1) and (x4 == 0 and x5 == 0 and x1 == 0):
                    cv2.putText(img, "2_TWO", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif (x2 == 1 and x3 == 1 and x4 == 1) and (x1 == 0 and x5 == 0):
                    cv2.putText(img, "3_THREE", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif (x2 == 1 and x3 == 1 and x4 == 1 and x5 == 1) and (x1 == 0):
                    cv2.putText(img, "4_FOUR", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif x1 == 1 and x2 == 1 and x3 == 1 and x4 == 1 and x5 == 1:
                    cv2.putText(img, "5_FIVE", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                # Conditions are joined with "and"; a comma-separated group
                # would form a tuple, which is always truthy
                elif x2 == 1 and (x1 == 0 and x3 == 0 and x4 == 0 and x5 == 0):
                    cv2.putText(img, "1_ONE", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)
                elif x1 == 1 and (x2 == 0 and x3 == 0 and x4 == 0 and x5 == 0):
                    cv2.putText(img, "GOOD!", (x_1, y_1), cv2.FONT_HERSHEY_PLAIN, 3,
                                (0, 0, 255), 3)

            cv2.imshow("camera", img)
            # Exit when the window is closed with its close button
            if cv2.getWindowProperty('camera', cv2.WND_PROP_VISIBLE) < 1:
                break
            cv2.waitKey(1)
            # if cv2.waitKey(1) & 0xFF == ord("q"):
            #     break
        self.camera.release()
        cv2.destroyAllWindows()


if __name__ == '__main__':
    Solution = Main()
    Solution.Gesture_recognition()

The result is clear at a glance: the computer recognizes your gesture and displays it on screen. Go and try it!