Greedy Search and Beam Search in LLMs: How Tokens Are Selected from Generation Probabilities

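Let us return to conditional-probability language modeling. Sequence generation is modeled as a product of conditional probabilities:

$$P(w_{1:T} \mid W_0) = \prod_{t=1}^{T} P(w_t \mid w_{1:t-1}, W_0), \qquad w_{1:0} = \emptyset$$

where $W_0$ is the initial context (the prompt). In other words, what current GPT-style models ultimately learn during training is the conditional distribution $P(w_t \mid w_{1:t-1}, W_0)$. Given the already generated words $w_{1:t-1}$, how should $w_t$ be chosen? That is the question this article addresses through two decoding algorithms used in LLMs: Greedy Search and Beam Search.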
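The chain-rule factorization above can be evaluated directly. The sketch below is a minimal illustration, assuming a hypothetical lookup table `COND` in place of a real model; it holds only the two conditional probabilities used in this article's examples, P(dog | The) = 0.4 and P(has | The, dog) = 0.9, and `sequence_log_prob` is an illustrative name. It sums log-probabilities instead of multiplying raw probabilities, the usual way to avoid numerical underflow on long sequences.

```python
import math

# Hypothetical conditional table standing in for a model: prefix -> {next word: probability}.
# The two values are the ones used in this article's examples; nothing else is known.
COND = {
    ("The",): {"dog": 0.4},
    ("The", "dog"): {"has": 0.9},
}


def sequence_log_prob(context, continuation):
    """log P(w_1..w_T | W_0) = sum over t of log P(w_t | W_0, w_1..w_{t-1})."""
    prefix, total = tuple(context), 0.0
    for word in continuation:
        total += math.log(COND[prefix][word])  # one conditional factor per step
        prefix += (word,)                      # condition the next step on this word
    return total


lp = sequence_log_prob(["The"], ["dog", "has"])
print(round(math.exp(lp), 2))  # 0.36
```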

1. Greedy Search

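The idea behind Greedy Search is simple and matches its name: at each step, pick the $w_t$ with the highest probability:

$$w_t = \arg\max_{w} P(w \mid w_{1:t-1}, W_0)$$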

Here is an example:

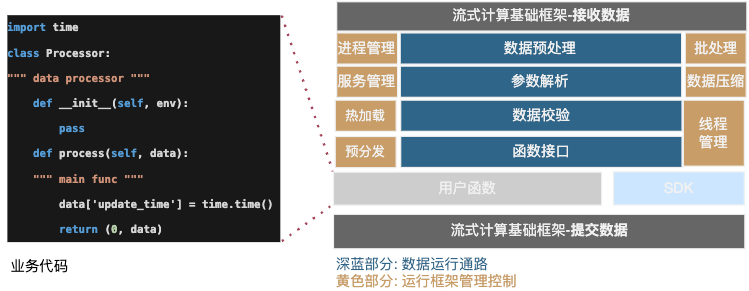

Greedy Search

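The figure shows greedy search starting from "The" and always following the highest-probability word, producing the sequence ("The", "nice", "woman") (the red path in the figure). Greedy search clearly cannot guarantee that the generated sequence is the globally most probable one: a high-probability word may be hidden behind a relatively low-probability word, and greedy search never looks there. Moreover, after generating a stretch of text, the model tends to start repeating itself (we come back to this with the gpt2-xl model below). Beam Search was introduced to mitigate the problem of missing a high-probability word that follows a lower-probability one.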
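To make this failure mode concrete, here is a minimal greedy decoder over a toy next-word table. The probabilities for nice, dog, woman and has are the ones used in this article; every other entry (car, house, guy, runs, and, and the whole `car` branch) is a hypothetical fill-in. `TREE` and `greedy_search` are illustrative names, not part of any library.

```python
# Toy next-word distributions, keyed by the prefix generated so far.
# nice/dog/woman/has come from the article's example; all other entries are hypothetical.
TREE = {
    ("The",): {"nice": 0.5, "dog": 0.4, "car": 0.1},
    ("The", "nice"): {"woman": 0.4, "house": 0.3, "guy": 0.3},
    ("The", "dog"): {"has": 0.9, "runs": 0.05, "and": 0.05},
    ("The", "car"): {"drives": 0.5, "is": 0.3, "turns": 0.2},
}


def greedy_search(prefix, steps):
    """At each step append the single most probable next word (argmax)."""
    seq, prob = tuple(prefix), 1.0
    for _ in range(steps):
        dist = TREE.get(seq)
        if not dist:  # no continuation known for this prefix
            break
        word, p = max(dist.items(), key=lambda kv: kv[1])
        seq, prob = seq + (word,), prob * p
    return seq, prob


seq, prob = greedy_search(["The"], steps=2)
print(seq, round(prob, 2))  # ('The', 'nice', 'woman') 0.2
# The path greedy never explores: P(dog | The) * P(has | The, dog)
print(round(TREE[("The",)]["dog"] * TREE[("The", "dog")]["has"], 2))  # 0.36
```

Greedy commits to "nice" (0.5) at the first step and ends with a sequence probability of 0.2, even though ("The", "dog", "has") reaches 0.36.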

2. Beam Search

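Beam Search improves on Greedy Search. With num_beams = k, it keeps the k most probable partial sequences (beams) at each step instead of just one, expands each of them with candidate next words, and again keeps only the top k. It trades extra computation for a more thorough search.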

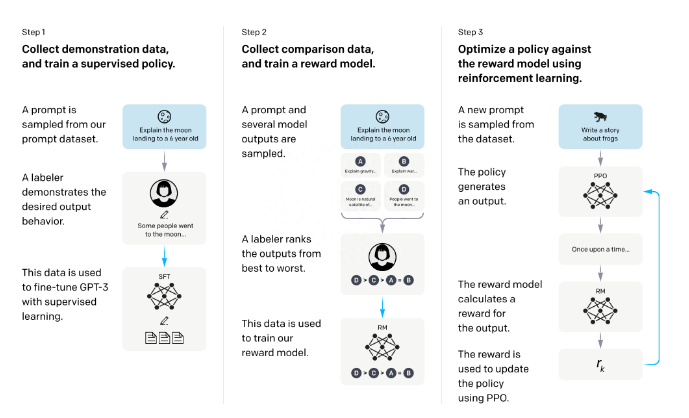

下面给出Beam Search 的一个案例, 取k=2:

beam search

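Step 1: expand "The" and keep the top 2 sequences:

("The","nice") -> 0.5

("The","dog") -> 0.4

Step 2: expand both and keep the top 2 again:

("The","dog","has") -> 0.4 * 0.9 = 0.36

("The","nice","woman") -> 0.5 * 0.4 = 0.20

At each step, beam search keeps the top 2 sequences, keeps expanding them, and finally outputs the most probable one. Here it returns ("The","dog","has") with probability 0.36, which beats the ("The","nice","woman") sequence (0.20) that greedy search chose. Beam search thus mitigates the problem of a high-probability word hidden behind a lower-probability one, but it still cannot guarantee the globally most probable sequence.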
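The walkthrough above can be sketched as a small beam search over the same kind of toy table. This is a minimal illustration, not the transformers implementation: the table `TREE` and the function `beam_search` are hypothetical names, only nice/dog/woman/has carry the article's probabilities, and the remaining entries are made-up fill-ins. It multiplies raw probabilities for readability; production implementations, including the one behind transformers' `generate`, accumulate log-probabilities instead.

```python
import heapq

# Toy next-word distributions, keyed by prefix.
# nice/dog/woman/has come from the article's example; all other entries are hypothetical.
TREE = {
    ("The",): {"nice": 0.5, "dog": 0.4, "car": 0.1},
    ("The", "nice"): {"woman": 0.4, "house": 0.3, "guy": 0.3},
    ("The", "dog"): {"has": 0.9, "runs": 0.05, "and": 0.05},
    ("The", "car"): {"drives": 0.5, "is": 0.3, "turns": 0.2},
}


def beam_search(prefix, steps, num_beams):
    """Keep the num_beams most probable partial sequences at every step."""
    beams = [(tuple(prefix), 1.0)]
    for _ in range(steps):
        candidates = []
        for seq, prob in beams:
            dist = TREE.get(seq)
            if not dist:  # nothing to expand: carry the finished beam over
                candidates.append((seq, prob))
                continue
            for word, p in dist.items():
                candidates.append((seq + (word,), prob * p))
        # Prune back to the k best sequences across all expansions of all beams
        beams = heapq.nlargest(num_beams, candidates, key=lambda c: c[1])
    return beams


for seq, prob in beam_search(["The"], steps=2, num_beams=2):
    print(seq, round(prob, 2))
# ('The', 'dog', 'has') 0.36
# ('The', 'nice', 'woman') 0.2
```

With num_beams=1 the pruning step keeps only the single best expansion, so the function reduces to greedy search and returns ("The", "nice", "woman"); larger values widen the search at the cost of more expansions per step.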

Next, using the gpt2-xl model from Hugging Face, I call the generate method with different decoding-strategy settings and compare the outputs. The code is as follows:

```python
# -*- coding: utf-8 -*-
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

device = "cuda:0" if torch.cuda.is_available() else "cpu"

n_steps = 256   # max_length: maximum total length in tokens, prompt included
num_beams = 5   # number of beams kept at each step of beam search


class GPT2:
    def __init__(self, model_name: str = "gpt2-xl"):
        self.tokenizer = AutoTokenizer.from_pretrained(model_name)
        self.model = AutoModelForCausalLM.from_pretrained(model_name).to(device)

    def greedy_search_generate(self, input_text: str):
        # input_ids and attention_mask, moved to the model's device
        inputs = self.tokenizer(input_text, return_tensors="pt").to(device)
        # With the defaults num_beams=1 and do_sample=False, generate() runs greedy search.
        # early_stopping is omitted here: it only affects beam-based generation.
        output = self.model.generate(
            **inputs,
            pad_token_id=self.tokenizer.eos_token_id,  # GPT-2 has no pad token; reuse EOS (50256)
            max_length=n_steps,
            no_repeat_ngram_size=2,  # never let the same bigram appear twice
        )
        return self.tokenizer.decode(output[0], skip_special_tokens=True)

    def beam_search(self, input_text: str):
        inputs = self.tokenizer(input_text, return_tensors="pt").to(device)
        output = self.model.generate(
            **inputs,
            max_length=n_steps,
            pad_token_id=self.tokenizer.eos_token_id,
            no_repeat_ngram_size=2,
            num_beams=num_beams,
            early_stopping=True,  # stop once num_beams finished candidates exist
        )
        # output[0] is the highest-scoring beam
        return self.tokenizer.decode(output[0], skip_special_tokens=True)


if __name__ == "__main__":
    input_text = "I enjoy walking with my cute dog"
    gpt = GPT2()
    print("greed_search:\n", gpt.greedy_search_generate(input_text))
    print("beam search:\n", gpt.beam_search(input_text))
```

Output:

greed_search:

I enjoy walking with my cute dog, and I love to read. I'm a big fan of the Harry Potter series, but I also enjoy the Lord of The Rings, the Hunger Games, The Hobbit, etc.

I'm not a fan, however, of any of those books that are set in the Middle Ages. The only one I've read is The Lord Of The Ring, which I think is a great book, though I don't think it's a good book for children. It's not that I dislike the books, I just don

.

My favorite book is the one that is set during the time of Jesus Christ. That's the book that has the most impact on me. My favorite character is Jesus. He's my favorite because he's so good. His character, his actions, everything about him is so perfect. And I like to think that he is my role model. So I try to be like him. But I can't be. Because I am not like Jesus, because I have a different character. A different personality. One that's more like me, one who is more of a normal person.

beam search:

I enjoy walking with my cute dog, but I don't want to walk with him in the rain."

"I'm not sure if it's a good idea to take my dog to the park," said one woman. "I think it would be better if I just let him run around the neighborhood with the other dogs."

Judging from this test's output, my personal impression is that Beam Search produces slightly higher-quality text.
