A Hand-Holding Tutorial: Scraping High-Resolution Images

2020-09-09


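I hadn't written a crawler in a while. Today the mood struck me, but I couldn't decide what to scrape, so I picked a website more or less at random to practice on.

唯美女生 (Weimei Girls)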

1. Environment Setup

This crawler is built with the Scrapy framework:

```bash
scrapy startproject Weimei
cd Weimei
scrapy genspider weimei "weimei.com"
```

Modify the settings.py file

and set the download path for the image files.
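A minimal settings.py fragment for this step, with the values copied from the full settings.py at the end of this article. IMAGES_STORE is the root folder that Scrapy's ImagesPipeline saves into (a relative path like this one is resolved against the directory you launch the crawl from), and ITEM_PIPELINES switches on the project's pipeline. Keep in mind that ImagesPipeline also needs the Pillow library installed.

```python
# settings.py (fragment): where images are saved and which pipeline saves them.
IMAGES_STORE = "Download"  # root folder for ImagesPipeline output

ITEM_PIPELINES = {
    # Lower numbers run earlier; 300 is the value Scrapy's project template uses.
    "Weimei.pipelines.WeimeiPipeline": 300,
}
```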

Write a launcher script, start.py:

```python
from scrapy import cmdline

# Same as typing "scrapy crawl weimei" in the project directory
cmdline.execute("scrapy crawl weimei".split())
```

2. Page Analysis

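For today, let's scrape the photography (写真) section.

The page is a typical waterfall (infinite-scroll) page: more posts load as you scroll down.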

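So there are two ways to handle it:

- Use Selenium to scroll the browser, then grab the source of the fully loaded page.

- Watch the requests the page sends as you scroll down and replay them in the crawler (which has the same effect as scrolling).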
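Scrolling a waterfall page just asks the server for page 1, 2, 3 and so on, so the second approach boils down to a loop. The sketch below is framework-agnostic: crawl_all_pages and fetch_page are hypothetical names, and stopping on an empty batch is an assumption about the server (the spider in this article instead requests a fixed three pages).

```python
from typing import Callable, Iterator, List


def crawl_all_pages(fetch_page: Callable[[int], List[str]],
                    max_pages: int = 50) -> Iterator[str]:
    """Replay the "scroll down" requests one page at a time.

    fetch_page(n) is a hypothetical stand-in for "send the AJAX request for
    page n and return the post links in the response".
    """
    for page in range(1, max_pages + 1):  # max_pages is a safety cap
        links = fetch_page(page)
        if not links:
            # Assumption: an empty batch means there is nothing left to load.
            return
        yield from links


# Usage with a fake three-page site instead of real HTTP requests:
fake_site = {1: ["post-1", "post-2"], 2: ["post-3"], 3: []}
print(list(crawl_all_pages(lambda n: fake_site.get(n, []))))
# ['post-1', 'post-2', 'post-3']
```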

I used the second approach.

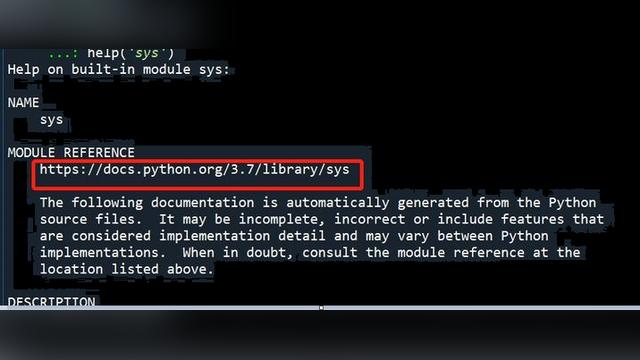

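As the screenshot shows, scrolling down makes the browser send an AJAX request.

The request method is POST,

and it carries form parameters.

From these we can infer that paged is the page number. Setting paged to 1 therefore fetches the first page, and sending the request repeatedly with different paged values lets us crawl multiple pages.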
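A small sketch of how the POST form changes from page to page. The field names and values are copied from the spider's data dict (using the lowercase "append" key from the full code); form_for_page is a hypothetical helper, and urlencode only approximates the body that Scrapy's FormRequest sends.

```python
from urllib.parse import urlencode

# Form fields as they appear in the spider's `data` dict.
BASE_FORM = {
    "append": "list - archive",
    "paged": "1",  # page number: the only field that changes between requests
    "action": "ajax_load_posts",
    "query": "17",
    "page": "cat",
}


def form_for_page(page: int) -> dict:
    """Return a copy of BASE_FORM with `paged` set, leaving BASE_FORM untouched."""
    if page < 1:
        raise ValueError("paged starts at 1")
    return {**BASE_FORM, "paged": str(page)}


for page in range(1, 4):
    # URL-encoded form body, roughly what gets POSTed to admin-ajax.php
    print(urlencode(form_for_page(page)))
# first line: append=list+-+archive&paged=1&action=ajax_load_posts&query=17&page=cat
```

Copying the dict per page, rather than overwriting one shared dict, keeps each request's parameters independent of the others.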

3. Code Walkthrough

weimei.py

class WeimeiSpider(scrapy.Spider):

name = 'weimei'

# allowed_domains = ['vmgirls.com']

# start_urls = ['https://www.vmgirls.com/photography']

#post请求提交的数据,可见网页分析

data = {

"Append": "list - archive",

"paged": "1", #当前页数

"action": "ajax_load_posts",

"query": "17",

"page": "cat"

} #重写start_requests def start_requests(self):

#进行多页爬取,range(3) -> 0,1,2

for i in range(3):

#设置爬取的当前页数 #range是从0开始

self.data["paged"] = str(1 + i)

#发起post请求 yield scrapy.FormRequest(url="https://www.vmgirls.com/wp-admin/admin-ajax.php", method='POST',

formdata=self.data, callback=self.parse)

def parse(self, response):

#使用BeautifulSoup的lxml库解析

bs1 = BeautifulSoup(response.text, "lxml")

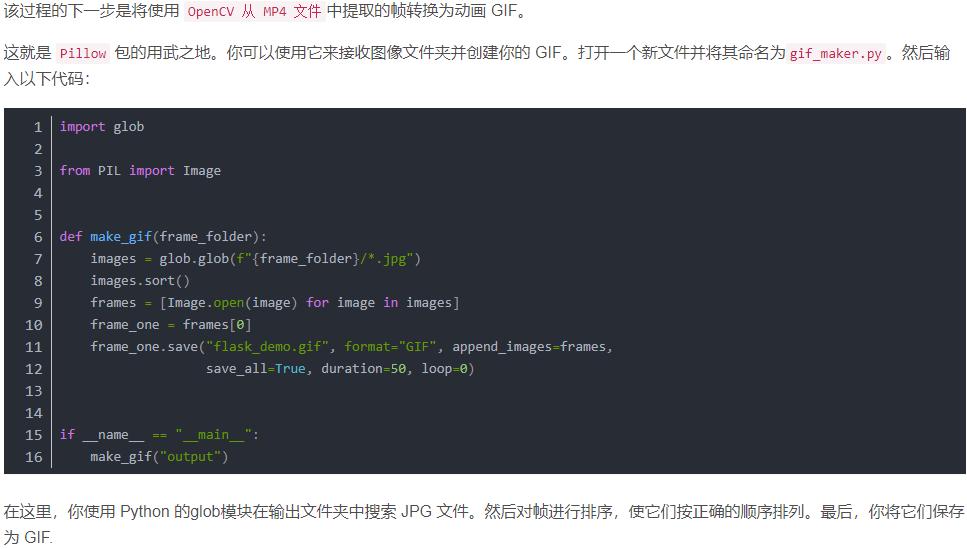

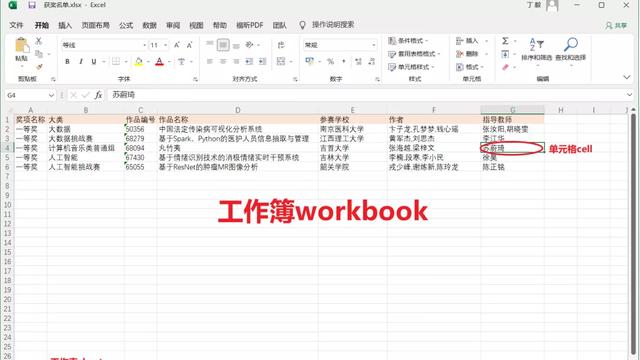

#图1

div_list = bs1.find_all(class_="col-md-4 d-flex")

#遍历 for div in div_list:

a = BeautifulSoup(str(div), "lxml")

#参考图2

#详情页Url

page_url = a.find("a")["href"]

#每一份摄影写真名称 name = a.find("a")["title"]

#发送get请求,去请求详情页,带上name参数

yield scrapy.Request(url=page_url,callback=self.page,meta = {"name": name})

#详情页爬取 def page(self,response):

#拿到传过来的name参数 name = response.meta.get("name")

bs2 = BeautifulSoup(response.text, "lxml")

#参考图3

img_list = bs2.find(class_="nc-light-gallery").find_all("img")

for img in img_list:

image = BeautifulSoup(str(img), "lxml")

#图4

#注意,我拿取的时data-src不是src #data-src是html5的新属性,意思是数据来源。 #图片url img_url = "https://www.vmgirls.com/" + image.find("img")["data-src"]

item = WeimeiItem(img_url=img_url, name=name)

yield item
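To see the card-extraction step of parse() without lxml or BeautifulSoup, here is a standard-library sketch using html.parser. Like the spider, it assumes each card is a `<div class="col-md-4 d-flex">` whose first `<a>` carries the detail-page URL in href and the set name in title. ListingParser is a hypothetical class, and the sample HTML and example.com URLs are made up; the real markup is only shown in the screenshots.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class ListingParser(HTMLParser):
    """Collect (href, title) from the first <a> inside each card div."""

    CARD_CLASS = "col-md-4 d-flex"  # same class string the spider searches for

    def __init__(self) -> None:
        super().__init__()
        self.results: List[Tuple[str, str]] = []
        self._depth = 0          # > 0 while inside a card (counts nested divs)
        self._got_link = False   # keep only the first <a> per card

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "div":
            if self._depth:
                self._depth += 1                     # a div nested in the card
            elif attr_map.get("class") == self.CARD_CLASS:
                self._depth, self._got_link = 1, False
        elif tag == "a" and self._depth and not self._got_link:
            self.results.append((attr_map.get("href", ""), attr_map.get("title", "")))
            self._got_link = True

    def handle_endtag(self, tag):
        if tag == "div" and self._depth:
            self._depth -= 1


sample_html = """
<div class="col-md-4 d-flex">
  <div class="media"><a href="https://example.com/1.html" title="Set A"><img data-src="a.jpg"></a></div>
</div>
<div class="col-md-4 d-flex"><a href="https://example.com/2.html" title="Set B">B</a><a href="/tag">tag</a></div>
<a href="/outside" title="not in a card">x</a>
"""

parser = ListingParser()
parser.feed(sample_html)
parser.close()
print(parser.results)
# [('https://example.com/1.html', 'Set A'), ('https://example.com/2.html', 'Set B')]
```

Tracking the div nesting depth is what lets the parser know when a card ends, which BeautifulSoup handles for you by building a full tree.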

pipelines.py

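```python
import os

import scrapy
from scrapy.pipelines.images import ImagesPipeline


class WeimeiPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        img_url = item["img_url"]
        name = item["name"]
        # Pass the set name along so file_path() can use it as the folder name
        yield scrapy.Request(url=img_url, meta={"name": name})

    def file_path(self, request, response=None, info=None, *, item=None):
        name = request.meta["name"]
        img_name = request.url.split('/')[-1]
        # Join the path so every image from the same photo set lands in the same folder
        img_path = os.path.join(name, img_name)
        # print(img_path)
        return img_path  # file path, relative to IMAGES_STORE

    def item_completed(self, results, item, info):
        # print(item)
        return item  # hand the item on to the next pipeline to be executed
```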
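The two string operations that decide where each image ends up, the absolute URL built in page() and the relative path returned by file_path(), can be checked on their own. This is a standard-library sketch: absolute_image_url and storage_path are hypothetical helpers, the sample data-src value is made up, and replacing characters that Windows forbids in folder names is an extra safeguard that the original pipeline does not have (set titles come straight from the page and may contain such characters).

```python
import os
import re
from urllib.parse import urljoin, urlsplit

SITE_ROOT = "https://www.vmgirls.com/"


def absolute_image_url(data_src: str) -> str:
    """Resolve a data-src value against the site root.

    Plain concatenation (SITE_ROOT + data_src) produces "//" when data_src
    starts with "/" and breaks if data_src is already absolute; urljoin
    handles both cases.
    """
    return urljoin(SITE_ROOT, data_src)


def storage_path(series_name: str, img_url: str) -> str:
    """Build "<set name>/<file name>", like WeimeiPipeline.file_path().

    Extra safeguard (an assumption, not in the original pipeline): characters
    that Windows forbids in folder names are replaced with "_".
    """
    folder = re.sub(r'[\\/:*?"<>|]', "_", series_name).strip() or "untitled"
    # Use only the URL path so a query string does not end up in the file name
    img_name = urlsplit(img_url).path.split("/")[-1]
    return os.path.join(folder, img_name)


url = absolute_image_url("/uploads/2020/sample.jpeg")  # made-up data-src
print(url)                                    # https://www.vmgirls.com/uploads/2020/sample.jpeg
print(storage_path("Summer: Part 1/2", url))  # Summer_ Part 1_2/sample.jpeg on Linux/macOS
```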

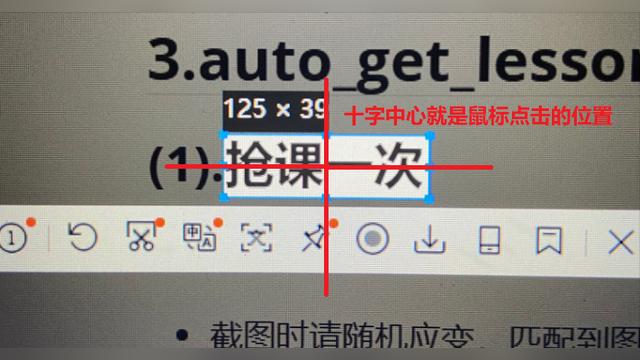

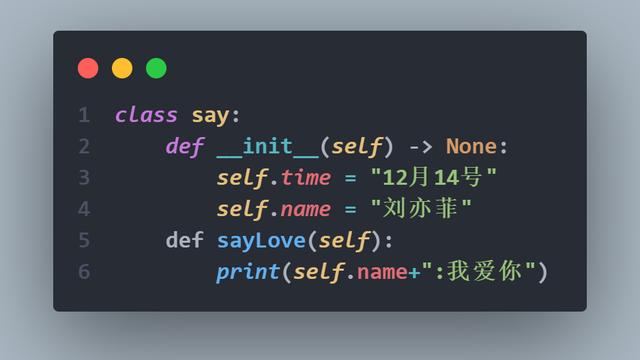

4. Supporting Figures

Figure 1

Figure 2

Figure 3

Figure 4

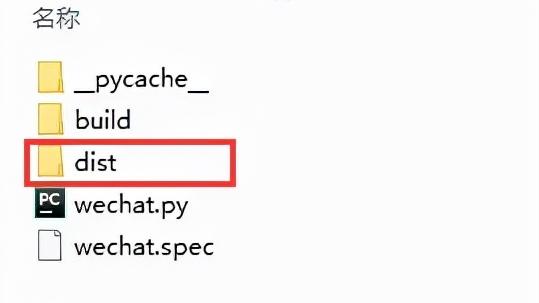

5. Results

6. Full Code

weimei.py

```python
# -*- coding: utf-8 -*-
from urllib.parse import urljoin

import scrapy
from bs4 import BeautifulSoup

from Weimei.items import WeimeiItem


class WeimeiSpider(scrapy.Spider):
    name = 'weimei'
    # allowed_domains = ['vmgirls.com']
    # start_urls = ['https://www.vmgirls.com/photography']
    data = {
        "append": "list - archive",
        "paged": "1",
        "action": "ajax_load_posts",
        "query": "17",
        "page": "cat",
    }

    def start_requests(self):
        # Request pages 1, 2 and 3
        for page in range(1, 4):
            formdata = dict(self.data, paged=str(page))
            yield scrapy.FormRequest(url="https://www.vmgirls.com/wp-admin/admin-ajax.php", method='POST',
                                     formdata=formdata, callback=self.parse)

    def parse(self, response):
        bs1 = BeautifulSoup(response.text, "lxml")
        div_list = bs1.find_all(class_="col-md-4 d-flex")
        for div in div_list:
            link = div.find("a")
            page_url = link["href"]
            name = link["title"]
            yield scrapy.Request(url=page_url, callback=self.page, meta={"name": name})

    def page(self, response):
        name = response.meta.get("name")
        bs2 = BeautifulSoup(response.text, "lxml")
        img_list = bs2.find(class_="nc-light-gallery").find_all("img")
        for img in img_list:
            img_url = urljoin("https://www.vmgirls.com/", img["data-src"])
            item = WeimeiItem(img_url=img_url, name=name)
            yield item
```

items.py

```python
import scrapy


class WeimeiItem(scrapy.Item):
    img_url = scrapy.Field()
    name = scrapy.Field()
```

pipelines.py

```python
import os

import scrapy
from scrapy.pipelines.images import ImagesPipeline


class WeimeiPipeline(ImagesPipeline):
    def get_media_requests(self, item, info):
        img_url = item["img_url"]
        name = item["name"]
        print(img_url)
        yield scrapy.Request(url=img_url, meta={"name": name})

    def file_path(self, request, response=None, info=None, *, item=None):
        name = request.meta["name"]
        img_name = request.url.split('/')[-1]
        img_path = os.path.join(name, img_name)
        # print(img_path)
        return img_path  # file path, relative to IMAGES_STORE

    def item_completed(self, results, item, info):
        # print(item)
        return item  # hand the item on to the next pipeline to be executed
```

settings.py

```python
BOT_NAME = 'Weimei'

SPIDER_MODULES = ['Weimei.spiders']
NEWSPIDER_MODULE = 'Weimei.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'Weimei (+http://www.yourdomain.com)'

LOG_LEVEL = "ERROR"

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.89 Safari/537.36"
}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'Weimei.middlewares.WeimeiSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
# DOWNLOADER_MIDDLEWARES = {
#    'Weimei.middlewares.AreaSpiderMiddleware': 543,
# }

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'Weimei.pipelines.WeimeiPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

IMAGES_STORE = "Download"
```

That's all it takes; you should be able to get it running now.